Introduction

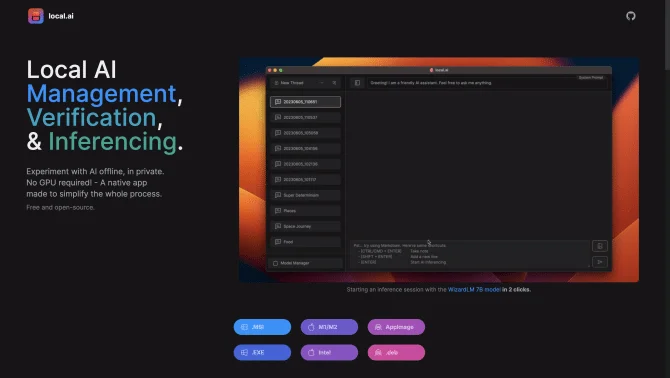

The Local AI Playground is a native application that streamlines experimenting with AI models on a local machine. Its user-friendly interface supports offline, private AI tasks without requiring high-end hardware such as a GPU. With CPU inferencing, GGML quantization, and centralized model management, the tool is accessible to a wide range of users, from students to data scientists. Its Rust backend keeps memory usage low and the application compact across platforms.

Background

Developed as an open-source project, Local AI Playground has been gaining traction in the AI community for simplifying complex AI tasks. The project is hosted on GitHub, where the community can contribute improvements. Its development is driven by the goal of making AI experimentation more accessible, with a focus on usability and efficiency.

Features of Local AI Playground

Free and Open Source

Free and open-source, ensuring accessibility and community-driven development.

Compact Rust Backend

Memory-efficient Rust backend, compact in size (<10MB on various platforms).

CPU Inferencing

CPU inferencing that adapts to the number of available system threads, so it runs on a wide range of hardware.
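The app's actual thread-selection logic is not described here. As a minimal sketch, assuming a policy of using all but one logical CPU, an inference backend might size its worker pool like this. The function `pick_thread_count` and its `reserve` and `user_max` parameters are hypothetical.

```python
import os


def pick_thread_count(available=None, reserve=1, user_max=None):
    """Hypothetical helper: choose how many CPU threads to use for inference.

    available: number of logical CPUs (defaults to os.cpu_count()).
    reserve:   threads left free so the UI stays responsive (an assumption).
    user_max:  optional user-configured upper bound.
    """
    if available is None:
        # os.cpu_count() can return None on some platforms; fall back to 1
        available = os.cpu_count() or 1
    threads = max(1, available - reserve)
    if user_max is not None:
        threads = max(1, min(threads, user_max))
    return threads


print(pick_thread_count(8))              # 7
print(pick_thread_count(8, user_max=4))  # 4
print(pick_thread_count(1))              # 1
```

Reserving a thread trades a little throughput for a responsive interface; a user-set cap lets people share the machine with other work.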

GGML Quantization

Support for GGML quantization at the q4, q5_1, q8, and f16 levels.
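To illustrate what a level like q4 means, here is a simplified sketch of symmetric 4-bit block quantization. It is loosely modeled on the idea behind GGML's q4 formats (blocks of 32 weights sharing one scale) but does not reproduce GGML's exact rounding or storage layout; the function names are hypothetical, and the 16-bit per-block scale is an assumption.

```python
BLOCK_SIZE = 32  # weights per block that share one scale factor


def quantize_block_q4(block):
    """Map floats to 4-bit signed integers in [-8, 7] with a shared scale."""
    amax = max(abs(x) for x in block)
    # Scale so the largest-magnitude weight maps to +/-7; avoid dividing by zero
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-8, min(7, round(x / scale))) for x in block]
    return scale, q


def dequantize_block_q4(scale, q):
    """Recover approximate floats from the 4-bit codes."""
    return [v * scale for v in q]


def bits_per_weight(block_size=BLOCK_SIZE, quant_bits=4, scale_bits=16):
    """Storage cost per weight, assuming one 16-bit scale per block."""
    return (block_size * quant_bits + scale_bits) / block_size


weights = [0.1 * (i - 16) for i in range(BLOCK_SIZE)]
scale, codes = quantize_block_q4(weights)
restored = dequantize_block_q4(scale, codes)
# Rounding to the nearest code keeps each error within half a scale step
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-9)  # True
print(bits_per_weight())  # 4.5
```

Under these assumptions a block costs 4.5 bits per weight versus 16 for f16, roughly 28% of the space, in exchange for a bounded rounding error per weight.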

Centralized Model Management

Centralized model management with resumable and concurrent downloading.
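Resumable and concurrent downloads are commonly built on HTTP `Range` requests. The sketch below assumes that approach (the source does not say how Local AI Playground implements it). `resume_range_header` and `split_ranges` are hypothetical helpers, and either technique only works if the server supports range requests, answering with `206 Partial Content`.

```python
import os


def resume_range_header(partial_path):
    """Build a Range header that continues a partially downloaded file."""
    done = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    # "bytes=N-" asks the server for everything from byte N to the end
    return {"Range": f"bytes={done}-"} if done else {}


def split_ranges(total_size, parts):
    """Split a file into inclusive (start, end) byte ranges for parallel workers."""
    if total_size <= 0 or parts < 1:
        return []
    chunk = -(-total_size // parts)  # ceiling division
    return [
        (start, min(start + chunk, total_size) - 1)
        for start in range(0, total_size, chunk)
    ]


print(split_ranges(10, 3))  # [(0, 3), (4, 7), (8, 9)]
```

Each tuple maps to a header such as `Range: bytes=4-7`; the parts are written at their offsets, and the finished file can then be checked with a digest.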

Usage-Based Sorting

Usage-based sorting for efficient model organization.
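The source does not say which usage signal drives the sort. As a minimal sketch, assuming each model records a use count and a last-used timestamp, the list could be ordered most-used first with recency as the tie-breaker. `ModelEntry`, its fields, and the model names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelEntry:
    name: str
    use_count: int
    last_used: float  # Unix timestamp of the most recent use


def sort_by_usage(models):
    """Most-used models first; ties broken by most recent use."""
    return sorted(models, key=lambda m: (m.use_count, m.last_used), reverse=True)


models = [
    ModelEntry("model-a", 3, 1_700_000_000.0),
    ModelEntry("model-b", 10, 1_600_000_000.0),
    ModelEntry("model-c", 3, 1_750_000_000.0),
]
print([m.name for m in sort_by_usage(models)])  # ['model-b', 'model-c', 'model-a']
```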

Digest Verification

Robust digest verification using the BLAKE3 and SHA-256 algorithms to ensure model integrity.
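Digest verification means hashing the downloaded file and comparing the result with a published checksum. The sketch below uses SHA-256 from Python's standard `hashlib`; BLAKE3 is not in the standard library (the third-party `blake3` package provides it), so it is left out here. The function names are hypothetical.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path, expected_hex):
    """Return True if the file's SHA-256 matches the expected hex digest."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

Reading in chunks matters for multi-gigabyte model files; a mismatch usually means a corrupted or incomplete download.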

Local Streaming Server

A local streaming server for AI inferencing that starts with minimal setup.
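How the local server streams its output is not specified here. Many local inference servers stream tokens as Server-Sent Events (SSE), so this sketch assumes that format: it groups `data:` lines into events and, as a hypothetical example, joins a `token` field from each JSON payload. The field name and both function names are assumptions, not Local AI Playground's documented API.

```python
import json


def iter_sse_data(lines):
    """Yield the data payload of each complete Server-Sent Event.

    Events end with a blank line; multiple data lines in one event are joined
    with newlines. Other fields (event:, id:, retry:) and comments are ignored.
    """
    buffer = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if buffer:
                yield "\n".join(buffer)
                buffer = []
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # A single leading space after the colon is not part of the value
            buffer.append(value[1:] if value.startswith(" ") else value)
    # An unterminated final event is discarded, as the SSE format specifies


def collect_tokens(lines, field="token"):
    """Hypothetical: concatenate one JSON field across streamed events."""
    return "".join(json.loads(data)[field] for data in iter_sse_data(lines))


stream = ['data: {"token": "Hel"}\n', "\n", 'data: {"token": "lo"}\n', "\n"]
print(collect_tokens(stream))  # Hello
```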

How to Use Local AI Playground

Begin by installing the Local AI Playground application, either through Docker or by following the instructions in the official GitHub repository. Adjust the settings to match your hardware, for example the number of CPU threads used for inference. Download and manage AI models through the centralized model management system, then start the local streaming server and experiment with AI inferencing through the provided UI.
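Once the server is running, any HTTP client can talk to it. The sketch below only builds (and does not send) a JSON POST request with Python's standard `urllib.request`. The host, port `8000`, path `/completions`, and the `prompt` and `max_tokens` body fields are placeholders assumed for illustration; substitute the address and request schema your installation actually uses.

```python
import json
import urllib.request


def build_completion_request(prompt, host="127.0.0.1", port=8000,
                             path="/completions", max_tokens=128):
    """Build a POST request for a hypothetical local inference endpoint."""
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}{path}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_completion_request("Explain GGML quantization in one sentence.")
print(req.get_method(), req.full_url)  # POST http://127.0.0.1:8000/completions
# To actually send it (requires the server to be running):
# with urllib.request.urlopen(req) as resp:
#     for line in resp:
#         print(line.decode("utf-8"), end="")
```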

FAQ about Local AI Playground

- How do I install Local AI Playground?

  You can install it via Docker or by following the setup instructions in the GitHub repository.

- What types of AI models are supported?

  Local AI Playground supports models in the GGML format, quantized at the q4, q5_1, q8, or f16 level.

- Do I need a GPU to use Local AI Playground?

  No. Local AI Playground is designed for CPU inferencing and does not require a GPU.

- How can I manage AI models within the application?

  Use the centralized model management system, which supports resumable and concurrent downloads.

- How do I start a local streaming server for AI inferencing?

  Once the application is installed, you can start the local streaming server in two clicks from the quick inference UI.

Usage Scenarios of Local AI Playground

Usage Scenario 1

AI enthusiasts and researchers can use Local AI Playground to experiment with various AI models without dealing with a complex setup.

Usage Scenario 2

Developers can use the free tool to integrate AI capabilities into their applications without licensing costs.

Usage Scenario 3

Data scientists can manage and experiment with AI models efficiently, ensuring the integrity of their work with digest verification.

Usage Scenario 4

Small businesses can utilize the tool for AI inferencing without investing in dedicated GPUs, making AI experimentation more affordable.

User Feedback

Local AI Playground is incredibly user-friendly and has allowed me to experiment with AI without needing a high-end GPU.

As a researcher, I appreciate the ability to manage multiple AI models efficiently with Local AI Playground's centralized system.

The tool's compact size and memory efficiency make it perfect for my limited hardware setup. Great for students and small businesses!

I've been able to quickly start AI inferencing sessions with just a few clicks. The interface is intuitive and the performance is impressive.

Others

Local AI Playground stands out for its commitment to simplifying AI experimentation. The tool's design philosophy focuses on accessibility and ease of use, making it a popular choice among a diverse user base.

Useful Links

Below are product-related links for Local AI Playground that you may find helpful.